Thursday, February 25, 2010

What do you do ?

The field guide decided to take them to the manufacturing department first. Yajur asked the chief engineer what they were doing. The chief engineer said they were building the most awesome mousetrap to catch a mouse. He explained that his team was responsible for making the trap so efficient that it closes at super-duper speed as soon as the mouse enters it.

They went to the R&D department next, and Yajur asked the same question: "What do you guys do?" The Head of R&D explained, "You see, we are inventing the yummiest cheese to attract the mouse to the trap so that we can catch the mouse. Our research says that it is not the mousetrap but the cheese that matters the most."

Next, they went to the marketing department. "What do you guys do?" The Marketing Chief said that his job was to put the mousetrap in the most colorful boxes so that people would like the package and buy the mousetrap to catch the mouse. He also said he conducted market research on 'positioning' the mousetrap in the right place so that people could improve their chances of catching the mouse.

Next they went into a big awesome room with a nice view. The field guide explained that this was the 'Thinking' room where the CEO, the advisors and experts discuss and innovate. Yajur walked up to an expert/advisor and asked him what he did. The expert said, "See, little boy, we invent new ways to catch mice. The mousetrap is only one way to catch mice. We provide solutions to come up with newer, better, cooler devices to catch mice."

Grandpa and Yajur were driving back home. Yajur had looked confused all along, so Grandpa asked him what was bothering him. Yajur hastily questioned back, "But Grandpa, why do people catch mice?" Grandpa said, "To get rid of them, as they are pests in the house." More confused, Yajur quizzed again, "But do we need to catch the mouse to get rid of it?" Grandpa smiled and knew that the day's wisdom had been delivered.

Saturday, February 20, 2010

Quantama Hiring Call - In Retrospect

The Job posting excerpt:

------

I am looking for people with entrepreneurial bent of mind to join the founding team of Quantama.com a mobile proximity company. I have the business case validated and have one of the largest retailers in India showing Intent to implement when the product is ready… Angel rounds are being vetted and talks in process… Have product case and prototypes in development…

The markets we are addressing are emergent and pervasive… A distant fortune is heard of…

I promise you that your experience shall be cast with risks, hardship, pain, sweat and blood. The journey will be turbulent given the economic times.

If you see providence where others see peril, do get in touch with me for more detail and I shall be glad to consider a sitting…

------

Friday, February 19, 2010

Serendipity is the Key.

Business-as-Usual is to work hard consistently, trying to cash in on the idea that made the business successful. Such businesses work hard at establishing Cliches. Cliches are good. They are 'time immemorial'. They express ideas in simple words, but they lack the freshness and eloquence of a magical orator. If you have read the blogs of Seth Godin, heard President Obama speak, or followed the marketing of Apple, you will notice that they do not rely on Cliches. They break the mold, create new meaning, speak a different truth, change the worldview, and present their stories in a more innate yet excitingly new package. They are Contrarians. They are Serendipitous. Here is what Seth Godin has to say about Cliches

Imagine that you are a T-Shirt vendor with an inventory of T-Shirts that vary across several attributes. Let's say you have 4 sizes (S, M, L, XL) and 4 colors (Red, Blue, Green, White). When you first set up shop, you do not know how many of each T-Shirt variant to carry (how many Blue-XL will sell?). So you start with 10 shirts per variant combination and open for business. Now, in the first month you sell all the Green-L shirts, some Blue-XL and none of the others. What do you do? You decide that Green-L sells more in this catchment and order a bigger inventory of Green-L and fewer of the others (finding out what is working and doing more of it). If the trend continues for the next few months, then, going by the pace, you may end up being a Green-L T-Shirt vendor. It's obvious that the more Green-Ls you stock, the more your sales report shows that you have sold them. You already know what's wrong in this analogy. Yes, it's a simple example, and it's so easy to see. It's a no-brainer.

But then, how come you do not see this analogy in the business strategies you follow? How come you are not trying to find out what else your consumers are willing to try? Why do we intellectualize for ages on why something "may not" work because "your" past data provides facts to support your beliefs? Is it Fear?

Start-ups, on the other hand, do not fear being serendipitous (in a way, they have nothing to lose). An entrepreneur identifies an opportunity to make meaning. A passion-fruit colored T-Shirt, a Vanilla-Sky colored T-Shirt. Try something afresh yet innate. Change the fabric, the texture, the weaving, the grain count, whatever... But be eager to explore, break the Cliche, turn it upside down. This is why it works and new tribes are formed. This is why the most successful ones are Genre-Bending.

Every time you support your views with past data, think again. Question yourself. Give Serendipity a chance. Believe in the art of the possible.

Monday, July 27, 2009

Shared Consumer Data, Reciprocal Marketing and Conversions for Retail

To carry this to the next level, it is important for Retailers to come out of their shell and stop worrying about 'who owns the consumer data'. I keep getting into all sorts of debates and uncertainties about why Organized Retailers should or should not expose their Consumer data. The argument for not exposing it is that clean data is expensive and is the IP differentiator for better conversions and loyalty for a given retailer, so the data should be owned and not shared.

But what is clean data? How do you validate it? How do you maintain it? What is the cost of logistics? What is the strategy for conversion?

Clearly, if each and every individual Retailer repeatedly manages its own dataset, the total cost of solving the above hurdles for "clean consumer data" is significantly higher for the Retailer than any benefit gained from such data. This is why every Retailer makes only a half-hearted effort. Nobody seems to have walked the whole mile (maybe except the Gas Agencies, as it's a regulatory body and they must validate). Consumer loyalty forms, credit card records and home delivery challans are the only validators existing today. Among these, credit card companies will not authenticate the consumer info fully. Payment gateways are a black box. Obtaining consumer psychographic details is a costly research and catchment exercise.

Instead, why not free the data? Put it up on a "Consumer Data Cloud". Open up the collaboration on consumer data by offering a direct incentive to consumers for maintaining and updating their own info (with a shroud of privacy). Technology exists for this today.

Sharing the consumer data across segments shall be the next disruptive wave to change the Retail horizon.

The time has come to reap the benefits of open collaboration (given that consumers are readily pouring their life's worth onto Facebook, Twitter, MySpace and the rest of the Ning bases). Yes, privacy is a concern. Privacy must be the focus while collaborating on such data, independent of whether it is on a Retailer's platform or otherwise. I would go a step further and argue that privacy can be better managed, audited and assured if consumer data is managed as a single source of truth (single cloud). The cost of assuring privacy for such data for every Retailer on their own infrastructure is incredibly high!

The benefits of freeing consumer data?

- Pruning of costs across CRM, which releases a fairly significant chunk of capital. A portion of that capital can be re-purposed for interacting with the 'consumer data cloud' (smaller than the cost of managing your own dataset).

- Single source of truth both for the Consumer and the Retailer. Consumers especially can have a single pane of glass across all their Retail outlay (visits, purchase, spend, loyalty points). Retailers of course benefit from higher analytics and trending across the consumption lifecycle of the consumers.

- Enablement of a Co-Opetition framework where Retailers can co-operate as well as compete for the Consumer's attention. This is where true reciprocal marketing evolves. Cross loyalty, cross-segment combo kits (dinner and a movie package), targeted promos and cross-segment redemption schemes will be more meaningful and rich in ideas and innovation.

End result? Better engagement, targeted touches, hand holding across the consumption lifecycle, point discounts, higher redemption and yes, absolute conversions. Sounds like utopia? Maybe not. But truly a step closer.

All this and more is possible only if Retailers break their shell and hatch. It's about time the data is free (as in freedom)...

Wednesday, July 22, 2009

The Worth of Value

Why is Value contextual? Because it is based on the end user, the end usage and the environment. This is a coarse-grained dimension of Value. Let's delve into the intricacies further and understand this point.

Marketing gurus point out that other fine-grained dimensions of value exist.

- Value is Relative; relative to alternatives available within the given context.

- Value is Perceptual; driven by the current senses.

- Value is Provisional; New information can change percepts.

Given these, the dimensionality of value from an economic perspective brings in the concept of the "worth" of value. The risk in acquiring a value target determines the worth of the target.

So are consumers really value-conscious or worth-conscious? I guess they are both. Once they (somehow) determine that something is valuable, they start seeking to find out if it's worthy. How can Retailers determine the worth of a value for the consumers? For one, the worth is driven by what the consumer perceives as the intrinsic risk in acquiring the value. Mostly, the risk of "being wrong", in whatever sense it may be, is the biggest risk I foresee in determining the worth.

What if I paid too much? What if I could have got a better product at the same price? Does this look good on me? What does my spouse think of it? Does this product convey a better meaning of me (Cool, Smart, Sharp)? Is it hygienic? Do I have a place to keep it? These and more are the risk-driven questions in the consumer's mind.

The exploding choice of products providing the same or similar value is going to add to the "risk quotient" in determining the worth of value. The fewer the choices, the higher the worth. The higher the worth, the higher the price.

In essence, it does boil down to offering value to consumers across all dimensions while making it worthy (Branding). This is especially so for the dimension of 'Value being provisional', where the Retailers provide differentiated clarity for the value being offered. Providing that differentiated clarity should start early in the consumption lifecycle. Typically the consumption lifecycle from a consumer's perspective (not the retailer's perspective) spans the phases of Awareness, Research, Transaction, Delivery and Consumption. It is important that the clarity is provided throughout this lifecycle.

Providing clarity requires personalized touches and individual reciprocation with each of your consumers. Knowing the consumer, including likes, dislikes, opinions, past purchases, current context, psychography etc., along with intrinsic knowledge of the product assortments offered, is a requirement. Building a value/worth grid based on this knowledge is essential. Engaging the consumer interactively through differentiated (not different) marketing channels is important. I guess this is the place where technology should gear up to enable the "Experiential Economy" to make the while worth.

Convergence of Proximity-based Technologies, Social Media, Collaborative Filtering, CRM and other such Simulacrum may offer a probable solution. It shall be some while before true convergence can happen. Disruptions in marketing channels, technology, loyalty programs, product management and category management may be inevitable before it gets worthy more than valuable.

Wednesday, July 08, 2009

The Experience Economy of Mobility and Convergence

Why? Purely because of the disruptive nature of just having more bandwidth on the majority of handsets across social strata.

Experience economy, as defined, is the set of orchestrated events made memorable to the consumers, which collectively become a "product" in themselves. Flash back to the movie "Minority Report", in which Tom Cruise walks through a shopping mall and, based on his iris scan, gets beamed relevant promotions within the vicinity that are specific to his profile and custom tuned to his liking.

Though I will not get into the super cool ingredients that may go into making this sci-fi happen today (as I am ignorant), I am fairly confident that a similar (diluted) experience can be achieved using mobile devices. The ability to ID the consumer through the device, Cloud Compute servers to crunch and store relevant Consumer Info (considering privacy) and relevance algorithms (similar to what is being used at Netflix and Amazon) exist in one form or another. The missing ingredient was ubiquity of bandwidth on mobile devices.

With the bandwidth hurdle removed (3G, Bluetooth 2.0), the play will boil down to "What is the best impact provided during the time of maximum exposure?". The time of maximum exposure is when the consumer is in your store or the shopping mall, browsing.

As arcane and cryptic as it sounds, therein lies the key for many new entrants and start-ups opening up new market categories. Each player can significantly differentiate itself by choosing the right attributes to own.

Quantama is one such start-up creating a new category in the mobile proximity space for Organized Retail. Stay Tuned...

Tuesday, July 07, 2009

Emerging Business Drivers and Orthogonal Validations

Impedance mismatch during knowledge transfer: When Domain knowledge has to flow from the Analyst to the developers and testers, the impedance mismatch between the articulation of the nuances of the innovation and the capability of a tester (per se) to understand and assimilate that knowledge into the respective Testcases is mind-numbingly high. This mismatch between what was expected to be built and tested and what ended up getting built and tested leads to heartburn during acceptance scenarios. Demand for extreme traceability of Testcases and test steps to the requirements has increased rapidly.

Complexity of the Product: The choice of technologies, frameworks and platforms used in manufacturing and building the solution also adds to the complexity of the test cycles. ERP implementation, PDLC of an Enterprise Product, SaaS-based Solution Delivery, Cloud Compute enabled solutions, Data Center Management, Legacy Integration etc. are not only rich in semantics from a Domain perspective but are also complex to assimilate, making it hard to understand the breadth and depth of testing strategies required to validate and provide assurance of quality. Demand for pre-built adapters and catalogs which can readily integrate and work as expected during last-mile integration is on the rise.

Heterogeneity of the Systems: Added to the complexity of the product, the IT ecosystems in which these products operate today are made up of different systems from vendors such as IBM, Oracle, Microsoft, SAP, Sun, HP etc. (including home-grown solutions), containing various platforms patched together through ESB and SOA integration. Even the choice of operating systems and hardware platforms has become so varied that performing a configuration and version compatibility test for any given platform has started to look daunting. Virtualization and Automation are becoming the norm of the day.

Shrinking GTM and Focus on short gain cycles: Product development lifecycles and go-to-market cycles are shrinking in the light of ever-changing business dynamics. Everyone wants to put the product out in the market as soon as possible, capturing customer share early to gain control over the changing business dynamics. Agility, it seems, is paying dividends for such short GTMs and providing a quick ROI. SaaS-based and online solutions are moving towards perpetual beta platforms which can rapidly adapt to customers' needs. This also holds true for ERP implementation cycles, which are shrinking by the day. What used to take 5 years is now being reduced to a 1-year implementation cycle with rapid customizations. Demand for baseline Testcases covering the top few probable customizations of a large product base is increasing. Pre-built Test Content 'cartridges' are the need of the day.

Shrinking IT Budgets: Discretionary spend is being monitored more closely, and the overall IT budget is shrinking by the day. CFOs are breathing down the CTO's neck for efficiency and productivity for every dollar spent. This has led to cost cutting in terms of support staff (people) and reduction in spend on applications and products (licenses). CFOs are moving away from making any large capital commitments at the outset, impacting high-CAPEX vendors. Converting fixed costs to variable costs is the Financial Officer's edict across LOBs. Demand for subscription-based usage is on the rise.

Global Recession Driving Margin Pressures: Global recession being the new reality, the pressure on margins (not to mention survival) is high. Corporates are looking for operational efficiencies to increase the margins to retain the operating profits while the top line sinks. Increasingly corporates are betting on digitizing and automating all processes that can be automated which shall convert to cost savings by downsizing the cost centers. Demand for outsourcing the validation and assurance and SLA management is on the rise.

Demand for Highly Reliable Products and Service: The general tolerance for a merely good quality product has come down. Consumers are demanding 'excellent' quality products. In effect, what was excellent yesterday is just good enough today. Reliability and Relevance are the two parameters that are driving the world markets. If a product or a solution does not meet the standards of 'excellence', then there is no place in the market for it. Corporates are trying to leverage machines (computers, robots, software) as much as possible to automate the core solutions. Automation, unlike manual processes, provides a high degree of reliability when employed throughout the production cycle. Demand for metrics-based reports with a high degree of SLA while enabling automation is on the rise.

Regulatory Compliance: With the increase in the number of regulations in any given sector (HIPAA, SOX, GLBA etc.), the burden of certifying the product, platform, application or service has increased dramatically. This has led to the amortization of working capital from core production cycles (bread and butter cycles) to compliance activities. Given the same capital budget (which seemingly is shrinking as we speak), the number of activities in production has increased to cater to the compliance demands. Corporates again are seeking automated compliance testing tools to ensure certification, which increases operational efficiency. The compliance requirements have made corporates refactor the dynamics of a verification and validation LOB from a cost center to a value center. Demand for compliance catalogs for verification is on the rise.

Increased Threats and Security Compliance: Threat levels have been ever increasing, and the types and nature of threats have become innovative. Security Compliance has become a core activity of any validation cycle for products and solutions. Penetration testing, Functional Security, Security Standards Compliance etc. add to the release and build test cycles as a natural part of the PDLC flow, increasing the number of core activities to be performed by QA. Corporates and QA departments are seeking automation platforms in these and other areas to free up enough bandwidth of the existing people, so that what has to be (and can be) performed as manual verification has enough people available to perform it. The need for security verifications as part of the automation solutions is on the rise.

What do these emerging drivers mean for the Verification and Validation process?

Simply put, testing is not a gating function anymore. Testing has become an inherent QoS (Quality of Service) throughout the lifecycle of production or service delivery. Testing has become a change agent, addressing risk early on in the lifecycle and continually assuring reliability, relevance, security and compliance, apart from providing functional acceptance and assurance for the product or service. Testing per se has become a value creator and quality differentiator for the end product, providing the required edge to compete in the marketplace of excellence. Testing has become an "Orthogonal" platform, process and activity to cater to the demands of the new markets.

AutoCzar, a test automation platform, seems to be reinventing itself to cater to these emerging trends.

Monday, July 06, 2009

Mobile Call without getting Billed

The technology, as reported, uses a "handover" protocol whenever the phone is within range of a Wifi network. An application needs to be installed on the phone to switch seamlessly between Wifi and GSM. Interesting and Exciting. Read more on this here...

Wednesday, July 01, 2009

New Venture (Quantama)

Finding seed capital during a recession, working on positioning for a new market, getting a core team in place and getting the first customer on board have their own share of anomalies. Added to that, learning all over again, for the new market realities, things that you may have seen or done before is an exciting challenge an entrepreneur may grow to appreciate. In fact there is a sense of vuja-de (things look new even though you may have done them before) this time around.

Deep down inside, I know that all the positioning I perceive for the product before hitting the market is a preparation in principle, to be able to cope with the game-changing realities once the product rolls to production. Eventually the market shall position the product. The trick is to plan enough to minimize the delta effort to reposition. This time around, I 'seem' to have understood the philosophy of "Flow with the Go" as Guy Kawasaki puts it in 'The Art of the Start'... But the best is yet to come...

As Frank Sinatra's Song goes:

"Out of the tree of life, I just picked me a plum

You came along and everything started to hum

Still it's a real good bet, the best is yet to come

The best is yet to come, and won't that be fine

You think you've seen the sun, but you ain't seen it shine"

I am hoping that this time around I see the sun shine...

Saturday, February 17, 2007

SaaS'y Benefits

Non-SaaSy Enterprise (Business as Usual):

In fact, since IT spend happens to be one of the key drivers of SaaS adoption, let's take a look at the average IT spend and how it breaks down into line items spread between CAPEX (Capital Expenditure) and OPEX (Operating Expenditure).

Here is a table on the cost breakups as per Gartner's survey on annual IT Staffing and spending:

70% of the IT spend per annum comes from OPEX, while only 22% comes from CAPEX. Focusing on the OPEX, we can see that 32% of the OPEX is due to Internal Staff (which is also 22.4% of the whole IT spend) and around 21+17+14=52% of the OPEX comes from Hardware, Software and Telecom. Here is a pie chart to display the breakdown.
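The arithmetic above can be double-checked with a few lines of Python. This is only a sketch: the percentages are the ones quoted in the text, the variable and function names are my own, and I am assuming the 21/17/14 figures map to Hardware, Software and Telecom in the order they are listed.

```python
# Share of total IT spend quoted in the text.
OPEX_SHARE_OF_TOTAL = 0.70

# Shares of OPEX quoted in the text. The mapping of 21/17/14 to
# hardware/software/telecom follows the sentence order (an assumption).
opex_breakdown = {
    "internal_staff": 0.32,
    "hardware": 0.21,
    "software": 0.17,
    "telecom": 0.14,
}


def share_of_total(opex_fraction: float) -> float:
    """Convert a share of OPEX into a share of the total IT spend."""
    return opex_fraction * OPEX_SHARE_OF_TOTAL


staff_of_total = share_of_total(opex_breakdown["internal_staff"])
infra_of_opex = sum(opex_breakdown[k] for k in ("hardware", "software", "telecom"))
infra_of_total = share_of_total(infra_of_opex)

print(f"Internal staff: {staff_of_total:.1%} of total IT spend")
print(f"Hardware + software + telecom: {infra_of_opex:.0%} of OPEX, "
      f"{infra_of_total:.1%} of total IT spend")
```

The staff figure reproduces the 22.4% mentioned above, and the same conversion shows what the 52% of OPEX means as a share of the whole IT spend.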

Now this being the BAU (Business As Usual) cost break downs, how would making your business SaaS'y benefit your bottom line?

Benefits offered by SaaS Model

SaaS, as defined, is an outsourced model which allows services to be accessed remotely through the internet by the consuming organization. From a SaaS provider's perspective, what are the benefits a provider can offer, and how can the provider achieve them? Some of the benefits proposed by the SaaS model are as follows:

- Service at Cheaper Cost

- Better SLA than internal IT (Scalability, Availability, Performance, Maintenance, Upgrades)

- Accountability

- Cheaper Cost: SaaS providers can offer cheaper cost by employing what is called 'economy of scale'. A SaaS provider (based on the maturity scale he is in) offers the same service to more than one customer. This allows the SaaS provider to share common utilities across multiple customers and to spread his cost across them, bringing down the overall cost of operation for the provider. The provider eventually passes on such cost reductions to the customer by charging less for the Service.

- Better SLA: Similarly, the SaaS provider can employ clustering, grid computing or an elastic compute cloud offered by some of the 'utility computing' enablers to leverage a managed and virtualized infrastructure, bringing down the provider's overall TCO (Total Cost of Ownership) of running his operations across customers. The economies of scale in virtualization of systems and elastic compute clouds (Amazon EC2) offer exponential benefits as computing volume increases. This not only results in further reduction in cost of operations but also aids in a better SLA offering. SLAs such as Availability, Scalability and Performance are inherent to such utility architectures.

- Accountability: Since SaaS providers act as external vendors, the legal obligations and contracts will implicitly be more stringent than the contracts with the internal IT. Also, since SaaS providers are 'in business' and need to sell the proposition to be profitable, they will have a genuine interest in making the model equitable (I am not accusing the internal IT here). Accountability also comes from the fact that the operational model of SaaS enables the consumer to get a metered/leased bill frequently (at the end of each billing period) that gives a detailed breakdown of the usage of services. Service usage, if subscription based, is simpler to manage than a 'transaction pricing' model. Transaction-based pricing, also referred to as pay-per-use or pay-by-the-drink, requires operational infrastructure at the SaaS provider's end which can enable such metering (more on this in further blogs). Such metered usage allows 'throttling' of usage by the consumer as well as the provider. An SRM (Service Relationship Management) office at the provider's end will ensure constant support (with support SLAs), probably better than the internal IT (due to economy of scale again). All this ensures that "IT as a Business" is more realistic to run by being SaaS'y rather than by trying to make the internal IT run their processes as a business.
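To make the difference between subscription and pay-per-use pricing concrete, here is a minimal sketch of a usage meter with a throttle. Everything in it (the `UsageMeter` class, the unit price, the limit, the flat fee) is hypothetical and made up for illustration; it is not how any particular SaaS provider implements metering.

```python
from dataclasses import dataclass


@dataclass
class UsageMeter:
    """Counts billable transactions for one consumer in one billing period."""
    price_per_transaction: float  # hypothetical unit price
    throttle_limit: int           # max transactions allowed per period
    used: int = 0

    def record(self, count: int = 1) -> bool:
        """Record usage; refuse (throttle) if the limit would be exceeded."""
        if self.used + count > self.throttle_limit:
            return False
        self.used += count
        return True

    def bill(self) -> float:
        """Pay-per-use charge for the period."""
        return self.used * self.price_per_transaction


def subscription_bill(flat_fee: float) -> float:
    """Subscription pricing: the charge does not depend on usage."""
    return flat_fee


meter = UsageMeter(price_per_transaction=0.05, throttle_limit=1000)
accepted = meter.record(800)   # within the limit, so it is counted
rejected = meter.record(300)   # would exceed the limit, so it is throttled
print(accepted, rejected, meter.used)
print(f"pay-per-use: {meter.bill():.2f}, subscription: {subscription_bill(50.0):.2f}")
```

The point of the sketch is that pay-per-use needs the provider to count every transaction (and gives a natural place to throttle), while a subscription bill needs no metering at all.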

Given these benefits, it makes sense to reduce the internal IT spend across the CAPEX and OPEX as shown and start considering IT as a 'service center' instead of a 'cost center'. The benefits of a reduced TCO as well as of a higher ROI (being a service center) are seemingly guaranteed (again based on what maturity level of SaaS is being embraced and the overall IT policy). Rationalization of the IT Governance structure as well as obtaining executive sponsorship can be real hurdles for initiating the programs (as these benefits have not been qualitatively demonstrated by many vendors yet).

That said, Enterprises still need to go through a paradigm shift in their operations to embrace SaaS into their IT ecosystem. This raises questions around:

- Enterprise efficiency and operational changes for SaaS Vendor Management.

- Enterprise Integration of SaaS systems into strategic systems that are running internally.

- Integration and collaboration of SaaS systems across SaaS vendors.

- Enabling business process orchestration that is seamless across the IT Ecosystem.

- Regulatory Compliance rules for SaaS engagements.

- Enabling and Tracking of a 'Process Health Matrix' to monitor the overall benefits across the enterprise IT ecosystem, to see whether transforming a few LOB applications into SaaS is jeopardizing short/medium term goals of the enterprise.

Friday, February 16, 2007

The Long Tail of IT Spend

This graph compares the total average IT spending of organizations of differing sizes. Notice that the graph has a 'Big Head' with high amplitude, immediately followed by 'The Long Tail' of lower amplitude (or lower spending) organizations. Though the graph does not make it explicit, given the universe of all organizations which spend on IT, the top IT-spending organizations ('The Big Head' of the curve) will still fall short of the collective 'volume' of IT spend that occurs on The Long Tail. This is seemingly true because the 'number' of organizations with a high IT spend is relatively (significantly) smaller than the overall 'number' of organizations who are spending on IT. In short, the spend volume of a small number of high-spend companies is lower than the spend volume of a large number of low-spend companies (huh).
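A quick back-of-the-envelope sketch shows how this can happen. The numbers below are entirely hypothetical (they do not come from the graph or from any survey); they are chosen only to illustrate that many small spenders can outweigh a few big ones.

```python
# Hypothetical segments, for illustration only.
big_head = {"organizations": 100, "avg_spend": 50_000_000}    # few, high spend
long_tail = {"organizations": 200_000, "avg_spend": 40_000}   # many, low spend


def total_spend(segment: dict) -> int:
    """Total spend volume of a segment: head count times average spend."""
    return segment["organizations"] * segment["avg_spend"]


head_total = total_spend(big_head)
tail_total = total_spend(long_tail)

print(f"Big Head total:  ${head_total:,}")
print(f"Long Tail total: ${tail_total:,}")
print("Long Tail outweighs Big Head:", tail_total > head_total)
```

With these made-up figures each Long Tail organization spends over a thousand times less than a Big Head one, yet the tail's collective volume is still larger because there are so many more of them.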

This theory holds true in other areas as well, for example the Amazon business model. Amazon, as a service provider over the internet, can cater to this long tail of business easily compared to brick-and-mortar bookstores such as Barnes & Noble or Borders. The 'easiness' comes by virtue of offering the 'book store service' over the internet. As Amazon does not have to maintain in-store inventory, it can have a large warehouse of almost 'all' kinds of books and deliver them directly (probably from the factory warehouse in some cases) to the consumer. The 'Top Sellers', which are relatively few in number, make up the Big Head, while the rest of the non-top-sellers make up the Long Tail. In 2005, 50+ percent of Amazon revenues came from the Long Tail. The last I checked (in 2007) this number was at 25+% (percentages are deceiving in the way you read them).

Here is another graph that explains the long tail of the music industry; it depicts the average number of plays per month for music available at Walmart and Rhapsody.

Coming back to the point of the Long Tail on IT spend, there seems to be a burning question (mostly as I see it) that needs to be answered about

Before answering that question, it's worth taking a brief look at the SMB market (Small and Medium Business, which is part of the long tail), whose IT spend amounts to $150B (yes, that's estimated at 150 billion dollars as per IDC).

It's also worth reading the article titled 'The IT Market’s $150B SMB Long Tail', published by Frank Gens, who is a senior VP of research at IDC.

Seemingly, both in the above article and mostly elsewhere, the answer to the question seems to lie in SaaS (Software as a Service). But can SaaS really cater to the SMB long tail?... It remains to be hypothesized...

Monday, February 12, 2007

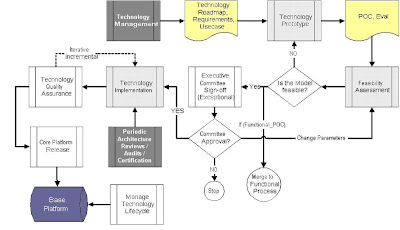

Architecture Process Framework

This 'process simulacrum' is not general purpose. In my mind, the process I will present in this article is applicable to a technical architecture process as against an overall functional PDLC.

(Caution: I have 'tried' to keep the article short, but have justified the definitions of some concepts, which makes it a rather longer version of a blog.)

This technical architecture process will emphasize the process workflows necessary to enable the “core platform” of a given product. The process will also help establish a “technology change management” practice within the product lifecycle. It will also accommodate any technical/technology POCs (Proof of Concept), early architectural spikes and prototypes required for the functional architecture of the product.

The overarching vision of this process simulacrum is to aid in objective evaluation of the architecture/technology in context so as to provide unbiased feedback to the organization to consider appropriate decisions during the overall product development roadmap.

Let us start with a workflow diagram that denotes the flow of process as well as artifacts (mixed into one). I have kept the flow as simple as possible so that it's easy to augment the process into both predictive frameworks such as RUP and agile frameworks such as Extreme Programming.

Going further, the blocks within the workflow for the given process are explained in detail.

1. Technology Management

The charter for technology management includes:

- Deriving a technology roadmap

- Establishing the roadmap within the organization

- Seeing through the execution of the roadmap

- Optimizing the roadmap based on changing product and technology requirements.

As an example, a technology roadmap is made up of:

- Technology compliance entities such as compliance for J2EE or .NET. (versions included)

- Third party integrations based on buy vs builds

- Migration paths such as Oracle 8i to Oracle 10g, J2SE 1.3 to J2SE 1.5 etc… (includes EOL derivations)

- Framework implementation milestones such as Struts, Spring, Hivemind, etc.

- Tools implementation for roundtrip engineering, engineering performance, code coverage etc.

- Specification compliance entities such as JSR168, JSR170.

- Meta standards compliance such as CIM, DCML etc.

- Concept/Process compliance such as SOA, MDA etc…

- Domain specific implementations such as CMDB/SMDB, Multi tenancy.

- Core enabling module milestones (relevant to platform) such as security kernels, web services, edge integration frameworks etc.

- Compliance architecture for regulatory standards such as SOX.

1.1) Technology Requirements Analysis (TRA)

1.2) Technology Lifecycle Management (TLM)

1.3) Deriving Technology Roadmap

1.4) Buy vs Build Analysis

1.1) Technology requirements analysis (TRA) : This activity derives the technology requirements necessary for the product. A technology usecase (where relevant) must also be derived within this activity. A technology usecase is different from a functional usecase: it depicts the product's use of technology (for example frameworks, libraries, toolkits) rather than its functions. Deriving the technology requirement for the product is part art and part science. This is because the requirements are heavily bounded by three influencing factors, namely:

- Business need and value add: The business need is the heaviest driver for the technology requirement. The requirement must always materialize into dollars earned or saved. Economic feasibility analysis is the key for validating the technology requirement against the business need. (Sub-Activity: Economic Feasibility Analysis)

- Market maturity of the technology: The next priority would be to understand the industry maturity and acceptance of the technology. In most cases a bleeding edge solution is premature to implement, and not all legacy technologies are meant for a sunset. Timing the technology against market progress and industry acceptance is always a balancing act. (Sub-Activity: Market Maturity Analysis)

- Product readiness for the technology: Lastly the product readiness to implement the technology within the product needs to be established as a part of the process for validating the technology requirement. (Sub-Activity: Product Readiness Analysis)

1.3) Deriving the Technology Roadmap : As stated, this is one of the core activities of technology management. Here the technology implementations, upgrades, migrations, and EOL (end of life) of the technology within the organization are each depicted against a milestone within the roadmap. Mostly the roadmap will be driven by the technology requirements validated through the requirements analysis process. The other key input for the technology roadmap is the product roadmap. The product roadmap acts as a driver for the technology roadmap to validate the milestones for technology change.
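As a rough illustration, a roadmap of this kind can be held as a list of entries, each tied to a milestone. This is only a sketch: the `RoadmapEntry` fields, the action names and all the dates below are hypothetical, chosen to echo the migration examples listed earlier.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class RoadmapEntry:
    technology: str      # e.g. "Oracle"
    action: str          # "implement", "upgrade", "migrate", or "eol" (assumed vocabulary)
    detail: str          # free-text description of the change
    milestone: date      # product milestone the change is tied to


def entries_due(roadmap, as_of):
    """Return entries whose milestone is on or before as_of, earliest first."""
    due = [e for e in roadmap if e.milestone <= as_of]
    return sorted(due, key=lambda e: e.milestone)


# Hypothetical roadmap; the dates are made up for illustration.
roadmap = [
    RoadmapEntry("Oracle", "migrate", "8i to 10g", date(2007, 9, 1)),
    RoadmapEntry("J2SE", "migrate", "1.3 to 1.5", date(2007, 6, 1)),
    RoadmapEntry("Struts", "eol", "retire from platform", date(2008, 3, 1)),
]

for entry in entries_due(roadmap, date(2007, 12, 31)):
    print(entry.milestone, entry.technology, entry.action, entry.detail)
```

Keeping the milestone on each entry is what lets the product roadmap drive the technology roadmap: shift a product milestone and the affected technology changes can be re-sorted against it.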

1.4) Buy vs Build : Another important activity of technology management is to perform a buy vs build analysis for the product. This analysis can kick start a POC effort which emerges out of the technology management discipline. The POC effort is depicted as a process stream within the technical architecture process workflows.

2. Technology Prototype

The technology prototype is a discipline or a process that enables activities for accomplishing architectural spikes or Proof of Concepts necessary to evaluate technology contenders (or architectural component) based on objective validations. The prototyping will be context specific and will be based on the nature of the POC required. The following are some case examples of the nature of prototypes:

- POC for a third party framework such as Spring or Hibernate.

- POC for a design threaded off from a functional implementation

- POC for an architectural component for platform services such as audit library, event managers

- POC for a tool such as code generators, transformers etc.

Core activities of Technology Prototype include:

2.1) Concept Analysis

2.2) Architecture Analysis

2.3) POC Construction

2.4) POC Documentation

2.1) Concept Analysis : First level theoretical evaluation for the POC implementation spawning the appropriate context for POC implementation. This phase is more to rationalize the scope for POC so as to not get carried away or overwhelmed with requirements not germane to establish necessary objectives. This activity will also help rationalize if the POC is necessary in the first place.

2.2) Architecture Analysis : once a context and a rationale are established through concept analysis, it becomes necessary to analyze multiple styles of architectural designs. This activity is more iterative and incremental in nature. Architectural analysis at the prototype stage tends to be driven by an “Agile process” to accommodate quick success criteria to choose a specific design (or a couple of designs) to implement in the POC construction phase.

2.3) POC Construction : POC construction is also iterative and incremental in nature, more so driven by an agile process to evaluate the best design for a given requirement. The POC construction will implement multiple architectural designs and will concentrate on quickly throwing away bad designs in the process.

2.4) POC Documentation : POC documentation is the most important activity throughout the prototype discipline. All the decisions starting from concept analysis through construction must be well documented to understand the merits of the decisions. It is very much necessary to justify the decisions through documenting the pros/cons, merits/demerits, implications, risks/mitigation and trade-offs made while arriving at a decision. Any compromise on the documentation will either prove costly at the later stages due to lack of understanding of a decision, or will expend unnecessary effort to recursively re-justify the decisions through unproductive means.

3. Feasibility Assessment

The feasibility assessment stage is to assess the feasibility and viability of the POC. This assessment is different from the feasibilities done at the technology management process block level. POC feasibility is done within the context of the architecture feasibility or the design model feasibility used within the POC from a purely technical angle. This stage is iterative in nature. When a given model is considered not viable for implementation, the model is quickly thrown away and a better design is synthesized for consideration. Feasibility assessment is also incremental in nature.

For example, during POC feasibility analysis, the POC conclusions are carefully evaluated to understand the trade-offs and merits with respect to the following:

- Complexity and its effect on the deadlines (Design Patterns, Architectural Style, Algorithmic)

- Risks involved and mitigation strategies.

- Product architecture readiness to augment the design under evaluation (architectural integrity).

- Are any platform design changes required?

- Are any functional design changes required?

- Cost of implementations including effort per resource type and cost of technology (tools etc.)

3.1) Assess the Complexity of POC.

3.2) Assess the effort of implementation.

3.3) Assess the impact on existing milestones.

3.1) Complexity of POC : Complexity analysis is already mostly done during the architectural analysis activities within the technology prototype process block. During feasibility analysis, an overall analysis in terms of the augmentation of the design into the product architecture is conducted to understand the nature of changes involved for the existing product. These changes can be to the platform or to the application depending on the context of implementation.

- Gap analysis: Gap analysis will help understand the delta of all the changes required to implement the POC.

- Impact Analysis: Impact analysis will help understand the risks and efforts involved for the delta identified during gap analysis.
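The two analyses above can be sketched as a set comparison followed by a sum. This is a minimal sketch under my own assumptions: the component inventories and the effort figures are hypothetical, and the impact step here only totals effort, leaving the risk side out.

```python
def gap_analysis(current, required):
    """Return the delta: what must be added and what must change."""
    to_add = {name: ver for name, ver in required.items() if name not in current}
    to_change = {
        name: (current[name], ver)
        for name, ver in required.items()
        if name in current and current[name] != ver
    }
    return to_add, to_change


def impact_analysis(to_add, to_change, effort_days):
    """Sum the estimated effort for every item in the delta."""
    touched = list(to_add) + list(to_change)
    return sum(effort_days.get(name, 0) for name in touched)


# Hypothetical component inventories (name -> version).
current = {"spring": "1.2", "struts": "1.1", "audit-lib": "1.0"}
required = {"spring": "2.0", "struts": "1.1", "event-manager": "1.0"}
# Hypothetical effort estimates in person-days.
effort_days = {"spring": 15, "event-manager": 30}

to_add, to_change = gap_analysis(current, required)
print("Add:", to_add)
print("Change:", to_change)
print("Effort (days):", impact_analysis(to_add, to_change, effort_days))
```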

3.3) Impact on existing milestone : Project impact analysis is based on the complexity of the implementation as well as the effort involved in implementing it. In effect, during project impact analysis, the effort is evaluated against the existing milestones (even more important for the functional implementations).

4. Executive Committee Sign-off

Executive Committee sign-off is a discipline enforced for high cost, high impact project implementations. This discipline is exceptional in nature and will be exercised based on a threshold set for the costs, efforts, complexity, or delta etc.

This discipline ensures appropriate visibility for high impact projects (POC implementations), so that the executive decision makers can sign off on any deviations from the current plans, release, or technology.

Mostly, the sign-offs are required for project deviations, project cost/effort, or any other thresholds set as per executive will.
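The threshold idea can be sketched as a simple check that reports which limits a POC estimate exceeds. The field names and the threshold values below are hypothetical; each organization would set its own.

```python
from dataclasses import dataclass


@dataclass
class PocEstimate:
    cost_usd: float
    effort_person_days: float
    schedule_slip_days: int


# Hypothetical thresholds; a real organization would set its own.
THRESHOLDS = PocEstimate(cost_usd=100_000, effort_person_days=120, schedule_slip_days=10)


def signoff_reasons(estimate, thresholds=THRESHOLDS):
    """Return the list of thresholds the estimate exceeds (empty means no sign-off needed)."""
    reasons = []
    if estimate.cost_usd > thresholds.cost_usd:
        reasons.append("cost")
    if estimate.effort_person_days > thresholds.effort_person_days:
        reasons.append("effort")
    if estimate.schedule_slip_days > thresholds.schedule_slip_days:
        reasons.append("schedule deviation")
    return reasons


poc = PocEstimate(cost_usd=40_000, effort_person_days=150, schedule_slip_days=5)
reasons = signoff_reasons(poc)
print("Sign-off required:" if reasons else "No sign-off required", reasons)
```

Returning the reasons, rather than a plain yes or no, keeps the exceptional nature of the discipline visible: the committee sees exactly which threshold triggered the review.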

5. Technology Implementation

The technology implementation discipline helps in realizing the signed-off POC within the product. The technology implementation discipline is reached only if the given context of implementation is any of the following:

- Platform component implementation (change)

- Framework, library, toolkit

- Third party induction into product

- Technology Migrations (Based on technology roadmap)

- Tools development for internal use (productivity, deployability, configurations etc…)

Technology implementations will be governed by active architectural reviews of implementation to ensure proper design-to-code realizations of the proposed designs through POC. Architectural reviews and architecture assurance will be covered in detail later in the document. The following process flow helps emphasize the iterative nature of any implementation lifecycle.

The implementations are subjected to unit tests, feature tests (based on technology requirements), and tests specific to architecture quality that includes modularity, configurability, performance, scalability etc.

6. Technology Quality Assurance

Technology quality assurance is a discipline to establish quality for all the technology components released to the base platform. Technology quality assurance activities, in spirit, are no different from the functional quality assurance activities. The only key differentiator here is the nature of the requirements and test cases that influence the quality assurance process.

Technology requirements specifications document and use cases will be used to feed the test cases specific to technology QA. The requirements will be generally for the features or functionality of a technology component such as framework, toolkit or library.

Activities: All/any activity adjudged and obvious to the QA process (as decided through PDLC) will be applicable within this process. QA activity will be iterative and regressive to maintain multiple bug cycles during the course of the project.

Artifacts: The input and output artifacts will also be specific to the QA process as decided by the PDLC. Bugs and issues will be logged using an appropriate tool.

Key Note: More specifically, unit tests and code coverage must have very high emphasis during QA of technology components released to the base platform. Also stringent performance, scalability and modularity requirements must be specified as the core requirements for all components that get released to the base platform. Generally the code coverage percentage, performance numbers and load capacity must be relatively higher for base platform components compared to application components.

7. Core Platform Release

Platform release process is a discipline to govern the components that get released into the mainstream base platform. The release process ensures proper change control and configuration management for the components within the base platform. Platform release is applicable to all home grown components as well as 3rd party implementations.

Activities: All/any activities obvious to the release process (as decided by the PDLC) will also be applicable to the core platform release process.

Artifacts: The input and output artifacts will be specific to the release process as decided by the PDLC.

Key Note: One of the core output artifacts for technology release is the ConfigMap updates of the base platform. Also relevant release notes and risks must be part of the output artifacts. The ConfigMap and risks list will be primary input artifacts for the “technology life-cycle management” discipline.

8. Technology Lifecycle Management

Technology lifecycle management is a discipline in which the base platform and technologies are serviced and maintained throughout the lifecycle of the related components. During lifecycle management, it becomes necessary to identify the primary owner of the relevant technology component throughout the lifecycle of the component. The lifecycle can be broadly broken down into the following three phases:

8.1) Technology Establishment

8.2) Technology Sustenance

8.3) Technology Sunsetting

8.1) Establishment : Within the establishment phase, one needs to ensure that all the relevant documents, binaries, source and related artifacts are made available for the appropriate groups (engineering, PSG or partners) to perform business as usual. Typically, developer education activity (walkthroughs, workshops or simple white boarding) is the core concern during the establishment phase.

During this phase, the components that got released into the base platform will encounter a lot of hiccups when engineers and developers start using them for the first time within the product. Even though a comprehensive QA would have been performed on the components, there will probably be a good number of issues and bugs raised by engineers/developers. The primary focus while planning the establishment should be to ensure that the owners of the released components are dedicating their time to a smoother inception of the components in any functional/application projects.

During this phase, it is important to establish a strict change management process for any changes requested by the developer community. It may not be necessary to implement all changes into the components immediately. Instead, due diligence must be enforced so that only critical change requests that were overlooked during the development life-cycle of the component are fed back. Consolidated changes (after analysis) must be routed through further activities of the technology management process block.

Typically the establishment phase would last until the first core product release including the related technology components is made. Generally tag promotions must be used to identify the status of technology components. Mostly the end of establishment phase would promote the components to “production ready” or “established”.
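Tag promotion can be sketched as a small state machine. Only the “established” tag comes from the text above; the other tag names and the allowed transitions are hypothetical, added just to show the shape of the idea.

```python
# Hypothetical tag names and allowed promotions; only "established" comes from the text.
PROMOTIONS = {
    "released": {"established"},
    "established": {"eol-tagged"},
    "eol-tagged": {"retired"},
    "retired": set(),
}


def promote(current_tag, new_tag):
    """Return new_tag if the promotion is allowed, otherwise raise ValueError."""
    if new_tag not in PROMOTIONS.get(current_tag, set()):
        raise ValueError(f"cannot promote from {current_tag!r} to {new_tag!r}")
    return new_tag


tag = "released"
tag = promote(tag, "established")   # end of the establishment phase
print(tag)
```

Rejecting skipped steps (for example going straight from "released" to "retired") is the design choice here: a component cannot be phased out without first having been established.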

8.2) Sustenance : The sustenance phase of the technology lifecycle is post establishment activity. The primary focus of the sustenance phase is to ensure continued maintenance of the components that were established into the product. Some of the core activities during the sustenance phase can be as follows:

- Timely resolutions of external bugs (customer bugs)

- External supplementary requirements gathering (Scalability, Performance, Extensibility, Usability, Configurability)

- Identify component enhancements

- Due diligence on CRs (Change requests)

- Consolidation and categorization of CRs, enhancements and other requirements for next version of component.

- Component version and release planning

- Inputs for component EOL (End-of-life) analysis

8.3) Sunsetting : The sunsetting phase is the phase in which the components whose EOL (End-Of-Life) has been identified are eventually phased out of the product. This phase contains the most critical activities, which ensure a smoother transition for the product during the component sunset.

The build-up of requirements for which components need to be identified for EOL is part of the architecture roadmap management discipline. That is, the requirements can arise from many factors or needs in any discipline, process, dimension or group, but collecting these requirements and analyzing them to objectively justify that the EOL is in fact necessary is part of architecture roadmap management.

The sunsetting phase is an outcome of the decision already made during the roadmap management stage, where EOL is tagged to the component. This phase will kick start further processes on how to sunset the components. The what-next after sunset must already be identified during architecture roadmap management.

Wednesday, January 31, 2007

ESB Primer

The main objective of an ESB is to facilitate interactions across people, process, application and data. Apart from the integration enablers, ESB is also made up of components that provide intelligence for service integration such as interface transformation, service matching, QoS (transactions, security, addressing, messaging), service level monitoring and system monitoring. Apart from the stated pervasive services endowed by ESB, some of the prevalent service enablement that is possible through ESB are Composite Application Frameworks (CAF) and Business Process Choreography/Orchestration to achieve Business Process Integration (BPI).

CAF is an “execution shell” around applications supporting different business needs; it provisions an aggregate requirement by sharing the processes of all these different applications. For example, to achieve quote-to-cash customer order management, an ERP will typically be integrated with a CRM system. This can be done using either the conventional integration strategy (direct integration) or through CAF. In the conventional integration strategy, retiring any one of the integrated modules to bring in a newer module will break the overall composite environment. But in a CAF execution shell, modules can be plugged and played to an extent, with comparatively less impact than with the traditional methods. SeeBeyond’s ICAN suite, webMethods’ CAF and SAP’s NetWeaver are some good CAF products available. The CAF may internally use a Business Process Choreography framework to enable flexible process orchestration across applications (ERP and CRM from the example) and adaptability across changing process components to support the business need (quote-to-cash from the example). IBM’s WebSphere Business Integration Server is an excellent business process orchestration and execution product. The WebSphere Business Integration Server uses a BPEL engine to achieve process choreography. SOA and ESB are key enabling technologies for CAF and BPI.
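The plug-and-play point can be illustrated with a toy sketch. This is not how any of the named products work; the interfaces, class names and the quote-to-cash flow below are hypothetical, and show only that a shell depending on interfaces survives a module swap.

```python
from typing import Protocol


class CrmModule(Protocol):
    def create_quote(self, customer: str, amount: float) -> dict: ...


class ErpModule(Protocol):
    def invoice(self, quote: dict) -> str: ...


class LegacyCrm:
    def create_quote(self, customer, amount):
        return {"customer": customer, "amount": amount, "source": "legacy-crm"}


class NewCrm:
    def create_quote(self, customer, amount):
        return {"customer": customer, "amount": amount, "source": "new-crm"}


class SimpleErp:
    def invoice(self, quote):
        return f"INVOICE {quote['customer']} {quote['amount']:.2f}"


class QuoteToCash:
    """The 'execution shell': it depends only on the two interfaces."""

    def __init__(self, crm: CrmModule, erp: ErpModule):
        self.crm = crm
        self.erp = erp

    def run(self, customer, amount):
        return self.erp.invoice(self.crm.create_quote(customer, amount))


# Swapping the CRM does not change the composite process.
print(QuoteToCash(LegacyCrm(), SimpleErp()).run("Acme", 1200))
print(QuoteToCash(NewCrm(), SimpleErp()).run("Acme", 1200))
```

In a direct integration the ERP code would call the legacy CRM by name, so retiring it would mean touching the ERP side as well.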

Friday, January 05, 2007

SOA Primer

The software service in the SOA is a network endpoint abiding by certain protocols and technology constraints required by SOA. Typically web services technology is used to enable the network endpoints as a software service. The key features of SOA are loose coupling, distribution, application autonomy, programming language agnosticism, data and communication standardization, interface standardization and heterogeneity. All of these key features are enablers for achieving an IT ecosystem which acts as a virtualization layer to support and map the enterprise business objectives to the IT process boundaries.

Web services is a technology specification which details the usage of protocols, communication layers, data standards, network standards, data parsing and binding specifications and conversion standards for a service endpoint. These specifications aid in defining the service interface, publishing the interface and discovering the interface using the standards specified.

Interface definition is achieved through a standard called Web Services Description Language (WSDL). The WSDL interface publishing and discovery happens using the communication protocol named Simple Object Access Protocol (SOAP). The target for publishing is a standard named Universal Description, Discovery and Integration (UDDI). The publishing data and grammar are encoded using a markup language named eXtensible Markup Language (XML). Web services also proposes the Quality of Service (QoS) necessary across the web services stack, supporting the transactions, security, messaging and addressing protocols for the service end points. Some of the other standards that are of interest are Business Process Execution Language (BPEL), Data Center Markup Language (DCML) and Web Services Composite Application Framework (WS-CAF).
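To give a feel for what travels over the wire, here is a minimal sketch that builds a SOAP 1.1 envelope in XML using Python's standard library. The envelope namespace is the real SOAP 1.1 one; the service namespace, the `GetQuote` operation and the `symbol` parameter are hypothetical placeholders.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"   # SOAP 1.1 envelope namespace
SERVICE_NS = "http://example.com/quotes"                # hypothetical service namespace


def build_request(operation, params):
    """Build a SOAP 1.1 envelope whose body holds one operation element."""
    ET.register_namespace("soap", SOAP_NS)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    call = ET.SubElement(body, f"{{{SERVICE_NS}}}{operation}")
    for name, value in params.items():
        ET.SubElement(call, f"{{{SERVICE_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="unicode")


xml_text = build_request("GetQuote", {"symbol": "AMZN"})
print(xml_text)
```

In practice the operation and parameter names would come from the service's WSDL rather than being typed in by hand.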

Friday, December 15, 2006

The Fifth Utility

The idea of provisioning services for clients on grounds similar to electricity or water service, wherein clients can consume as many resources as needed and pay only for what is used, is considered ‘Utility Computing’.

Whenever an operation or a service becomes an absolute necessity for an enterprise to function, and the abundance and standardization of such services drives the ubiquity of such a service, then it will make the most sense for an organization to obtain such services from a utility source. (Ex: Electricity)

Any process or offer that can be commoditized as a service can be packaged as a ‘probable’ contender for utility computing.

In my view, the utility computing model implies that the majority of the services that are ubiquitous across general consumption (e-commerce or enterprise alike) can probably be serviced through a utility computing paradigm.

Such services can then be consumed on a pay-by-the-drink usage model.
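Pay-by-the-drink boils down to metering usage and pricing it per unit. Here is a minimal sketch; the resource names, unit prices and usage records are all hypothetical.

```python
from collections import defaultdict

# Hypothetical unit prices (USD per unit consumed).
RATES = {"storage_gb_month": 0.15, "compute_hour": 0.10}


def bill(usage_records, rates=RATES):
    """Aggregate metered usage per customer and price it at the unit rates."""
    totals = defaultdict(float)
    for customer, resource, quantity in usage_records:
        totals[customer] += quantity * rates[resource]
    return {customer: round(amount, 2) for customer, amount in totals.items()}


# Metered usage: (customer, resource, quantity consumed).
usage = [
    ("acme", "storage_gb_month", 200),
    ("acme", "compute_hour", 50),
    ("globex", "compute_hour", 10),
]
print(bill(usage))
```

A customer who consumes nothing produces no usage records and so is charged nothing, which is the whole appeal of the model.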

Technology (IT) in general can be considered as a usecase for utility computing. IT as a utility is a very highly debatable topic. I do want to suggest that IT as a whole does not fit into the utility model. IT services can be broadly categorized into two systems.

- Systems that provide strategic benefits for an enterprise.

- Systems, which are ubiquitous across enterprises.

The utility computing model best fits the second category of services. In my view, the utility computing model implies that the majority of the services that are ubiquitous across enterprises can be outsourced. Such outsourced services can be used based on a pay-by-the-drink usage model. The idea of paying for applications, compute resources or storage based on the pay-by-the-drink model sounds great in theory.

‘But does it really work in practice?’

If the fifth utility were to be considered a ‘simulacrum’ then the probable originals could be the following market models:

- Search as a service (Google, Yahoo)

- Email, News and Hosting as a service (Gmail, maps, MySpace, YouTube)

- Blogging, FeedBurners

- CPI, CPA ad models (AdSense, Amazon Affiliate)

- Search Marketing, Media and Click Streaming (Akamai, Overture, DoubleClick)

- Online Storage (Amazon S3, Gmail plugins)

- Decentralization of data (Napster, Gnutella)

- Virtualization of systems

- Emergence of Web 2.0

The current focus seems to be more towards virtualization of systems as the first step towards a utility computing stack. Notice that decentralization of ‘data’ through the usage of torrents or similar P2P networks (Ex: Napster) is a way towards virtualization of data. Also, the emergence of Web 2.0 is pushing the industry further towards ‘Web as a platform’ for provisioning the service. Web 2.0 in general, and probably the semantic web in particular, does and will have more relevance towards offering services as a utility to be consumed over the web. (More about this in later blogs).

Note that SOA is a technology architecture to enable LOB applications to work within a composite application framework...

Meanwhile, there is a business side of the SOA and the definition of a 'Service' in the business model is more important than the definition of the 'Service' as explained everywhere while preaching SOA. (Check webservices and SOA keywords while searching for technical definition on Google).

What then makes up a business definition of a Service ?

I will present an over simplified version of the key constituents of a ‘Service’. I will call this the first-set

- Service Description

- Service Costing and Pricing

- Objectives of the Services (Also called Service Level Objectives)

- Service Level Agreements

- Service Usage and Consumption plan leading to a Service Offer

- A Master Service Agreement for a Portfolio of Services

- Service Metering (or monitoring)

- Service Lease Agreement

- Service Chargeback or Billing

- Regulatory Compliance

There are many frameworks such as ITIL, COBIT etc. that deal with the concept of ‘IT as a service’. Most of these frameworks revolve around the first-set of drivers I have presented. The key theme of service provisioning is based on building a Service Catalog and a CMDB (Configuration Management DataBase). The Service Catalog and the CMDB in general form a dichotomy built around what they call the “front office” and the “back office”.

Most of the front office activities are addressed by building a service catalog. This includes creating a service portal as a single pane of glass across functions such as:

- Help Desk and Requisition

- Self Service

- Auditing (for compliance)

- Financial Accounting

- Relationship Management

- Vendor Management (Service)

- Inventory Management

- Service Configuration management (CMDB, Bill-of-Materials)

- Capacity Management

- Availability Management

- Incident and Change Management

- Resource Workflows and System Integrations

- Vendor management (Configuration)

This simplification of the service definition also helps us understand that utility definition will not be any significantly different from the functions and operations management of a service, except that not all services are utilities.

In conclusion, the fifth utility as a simulacrum is still in the making, waiting for the integration of the front office with the back office through the probable emergence of Web 2.0 based platforms, which in turn make SOA and SOI happen. In effect, for the pay-by-the-drink paradigm to work, the critical ingredients from the second-set must emerge as a product that best fits into the overall framework.

Having said this, there are some successful examples of utility-based usage of Web Services, demonstrated by Amazon on their AWS portal. These are some of the trends worth tracking for the simulacra to emerge.

Sunday, November 12, 2006

What is Simulacrum ?

Some context:

- Universe being a simulacrum without an original which recurs eternally.

- Simulacra of the beings where the copy is true without a model (model being a theory). This has branches of study around semiotics and biosemiotics.

- God is a Simulacrum.

- A-Life (Artificial Life) is a Simulacrum

"The simulacrum is never that which conceals the truth -- it is the truth which conceals that there is none"

The simulacrum is true.

Thinking about it, my blog is a simulacrum of what I perceive: technology is itself a simulacrum, and a self-fulfilling prophecy of recurrence. Hence the title "The Simulacrum".

I hope to capture (to my ability and bandwidth that is) my thoughts and perceptions around the assertions, lemma or postulates (being the idealized original) of specific technologies and the simulacrum around the same. I am hoping to stick to this general frame of thought as much as possible during my blogs.

'The hope' being the idealized original prediction and 'the blog' being the simulacrum !

Sunday, July 23, 2006

Internet Security and Firewalls available on Amazon

Internet Security and Firewalls

Implementing a firewall is the first step in securing your network. This book will show you how to construct an effective firewall and teach you other methods to protect your company network. Internet Security and Firewalls gives you the knowledge you need to keep your network safe and gain a competitive edge.