Over several years and several product development engagements, I have come across such varied organizational structures and 'disparate' architecture and development processes that I felt I should reflect on my own understanding of these ideologies and present a 'process simulacrum' that I have assimilated over time. In essence, the simulacrum is based on varied development methodologies such as:

- RUP (Rational Unified Process)

- Agile UP

- XP (eXtreme Programming)

- Scrum

- DSDM (Dynamic Systems Development Method)

This 'process simulacrum' is not general purpose. The process I present in this article applies to the technical architecture process, as against an overall functional PDLC (product development lifecycle).

(Caution: I have 'tried' to keep the article short, but have justified the definitions of some concepts, which makes it a rather longer version of a blog.)

This technical architecture process will emphasize the process workflows necessary to enable the “core platform” of a given product. The process will also help establish a “technology change management” practice within the product lifecycle. It will also accommodate any technical/technology POCs (Proof of Concept), early architectural spikes and prototypes required for the functional architecture of the product.

The overarching vision of this process simulacrum is to aid in objective evaluation of the architecture/technology in context so as to provide unbiased feedback to the organization to consider appropriate decisions during the overall product development roadmap.

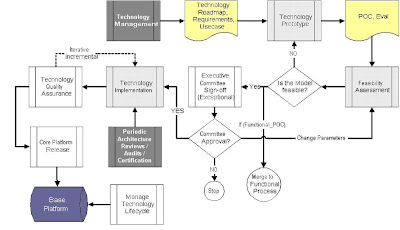

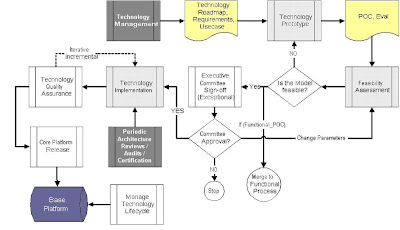

Let us start with a workflow diagram that denotes the flow of process as well as artifacts (mixed into one). I have kept the flow as simple as possible so that it's easy to augment the process into both predictive frameworks such as RUP and agile frameworks such as eXtreme Programming.

The blocks within the workflow are explained in detail below.

1. Technology Management

The charter for technology management includes:

- Deriving a technology roadmap

- Establishing the roadmap within the organization

- Seeing through the execution of the roadmap

- Optimizing the roadmap based on changing product and technology requirements

A technology roadmap is different from an architecture roadmap in that it manages the external and internal (home-grown) technologies that can be used to manufacture the product or that aid the product manufacturing process itself. Typically a technology change management governor (or a council, in very large organizations) owns the responsibility for technology change management and drives the roadmap.

As an example, a technology roadmap is made up of:

- Technology compliance entities such as compliance for J2EE or .NET. (versions included)

- Third-party integrations based on buy vs build decisions

- Migration paths such as Oracle 8i to Oracle 10g, J2SE 1.3 to J2SE 1.5 etc… (includes EOL derivations)

- Framework implementation milestones such as Struts, Spring, Hivemind, etc

- Tools implementation for roundtrip engineering, engineering performance, code coverage etc.

The technology roadmap is a subset of the overall architecture roadmap. In addition, the architecture roadmap owns the conceptual entities specific to the overall functionality of the product:

- Specification compliance entities such as JSR168, JSR170.

- Meta standards compliance such as CIM, DCML etc.

- Concept/Process compliance such as SOA, MDA etc…

- Domain specific implementations such as CMDB/SMDB, Multi tenancy.

- Core enabling module milestones (relevant to platform) such as security kernels, web services, edge integration frameworks etc.

- Compliance architecture for regulatory standards such as SOX.

The core activities of technology management include:

1.1) Technology Requirements Analysis (TRA)

1.2) Technology Lifecycle Management (TLM)

1.3) Deriving Technology Roadmap

1.4) Buy vs Build Analysis

1.1) Technology Requirements Analysis (TRA) : This activity derives the technology requirements necessary for the product. A technology use case (where relevant) must also be derived within this activity. A technology use case is different from a functional use case: it depicts how the product uses a technology (for example, frameworks, libraries, toolkits) rather than the product's functional behavior. Deriving the technology requirements for the product is part art and part science, because the requirements are heavily bounded by three influencing factors:

- Business need and value add: The business need is the heaviest driver for the technology requirement. The requirement must always materialize into dollars earned or saved. Economic feasibility analysis is the key to validating the technology requirement against the business need. (Sub-Activity: Economic Feasibility Analysis)

- Market maturity of the technology: The next priority is to understand the industry maturity and acceptance of the technology. A bleeding-edge solution is, in most cases, premature to implement, and not every legacy technology is due for a sunset. Timing the adoption of a technology against market progress and industry acceptance is always a balancing act. (Sub-Activity: Market Maturity Analysis)

- Product readiness for the technology: Lastly, the readiness of the product to adopt the technology needs to be established as part of validating the technology requirement. (Sub-Activity: Product Readiness Analysis)
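One way to make the three factors comparable across candidate technologies is a simple weighted score. The sketch below is only an illustration: the weights, the 0.0 to 1.0 rating scale, and the function name `requirement_score` are all assumptions; the only thing taken from the text above is the ordering (business need weighs heaviest, then market maturity, then product readiness).

```python
# Hypothetical weights: the article only states the ordering of the factors,
# not any numeric values.
WEIGHTS = {"business_need": 0.5, "market_maturity": 0.3, "product_readiness": 0.2}


def requirement_score(ratings):
    """Combine per-factor ratings (each between 0.0 and 1.0) into one weighted score."""
    for factor in WEIGHTS:
        rating = ratings[factor]
        if not 0.0 <= rating <= 1.0:
            raise ValueError(f"{factor} rating must be between 0.0 and 1.0")
    return sum(WEIGHTS[factor] * ratings[factor] for factor in WEIGHTS)


# Illustrative ratings for two imaginary candidates.
bleeding_edge = {"business_need": 0.9, "market_maturity": 0.2, "product_readiness": 0.4}
proven = {"business_need": 0.7, "market_maturity": 0.9, "product_readiness": 0.8}

print(requirement_score(bleeding_edge) < requirement_score(proven))
```

A score like this does not replace the three sub-activity analyses; it only records their outcomes in a form that can be compared and revisited.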

1.2) Technology Lifecycle Management (TLM) : Technology life-cycle management includes the management of the existing technology components within the core platform of the product. This includes maintenance and optimizations as well as migrations and upgrades. This activity also manages a config map (or an inventory) of the current technologies used within the product and the respective status of the technologies. Example of technology status could be “marked for migration”, “eol identified”, “current”, “legacy” etc… [I have a separate block and a section dedicated to this activity. Depicted in the diagram as 'Manage Technology Lifecycle']
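As a rough illustration, the config map can be kept as a small typed inventory. In this sketch the status values come from the examples above, while the class names, the entries, and their version numbers are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class TechStatus(Enum):
    CURRENT = "current"
    LEGACY = "legacy"
    MARKED_FOR_MIGRATION = "marked for migration"
    EOL_IDENTIFIED = "eol identified"


@dataclass(frozen=True)
class TechEntry:
    name: str
    version: str
    status: TechStatus


# Hypothetical inventory; names, versions and statuses are illustrative only.
CONFIG_MAP = [
    TechEntry("Oracle", "8i", TechStatus.MARKED_FOR_MIGRATION),
    TechEntry("J2SE", "1.3", TechStatus.EOL_IDENTIFIED),
    TechEntry("Spring", "2.0", TechStatus.CURRENT),
]


def needs_attention(config_map):
    """Return the names of entries whose status is anything other than CURRENT."""
    return [entry.name for entry in config_map if entry.status is not TechStatus.CURRENT]


print(needs_attention(CONFIG_MAP))
```

Keeping the status as an enumeration rather than free text makes it harder for the inventory to drift into inconsistent labels over time.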

1.3) Deriving the Technology Roadmap : As stated, this is one of the core activities of technology management. The roadmap depicts the technology implementations, upgrades, migrations, and EOLs (end of life) within the organization, each tied to a milestone. The roadmap is driven mostly by the technology requirements validated through the requirements analysis process. The other key input for the technology roadmap is the product roadmap, which acts as a driver for validating the milestones for technology change.

1.4) Buy vs Build : Another important activity of technology management is to perform a buy vs build analysis for the product. This analysis can kick-start a POC effort that emerges out of the technology management discipline. The POC effort is depicted as a process stream within the technical architecture process workflows.

2. Technology Prototype

The technology prototype is a discipline or a process that enables activities for accomplishing the architectural spikes or proofs of concept necessary to evaluate technology contenders (or architectural components) based on objective validations. The prototyping will be context specific and will be based on the nature of the POC required. The following are some examples of the nature of prototypes:

- POC for a third party framework such as Spring or Hibernate.

- POC for a design threaded off from a functional implementation

- POC for an architectural component for platform services such as an audit library or event managers

- POC for a tool such as code generators, transformers etc.

The purpose of a technology prototype discipline is to take the technology and product requirement closer to reality through objective evaluation of the concept through prototypical implementations. Also, the technology prototype discipline provides a controlled environment to fail within the given context bounds so as to evaluate the risks of implementation of a specific functional component or a technology component that gets embedded into the product. A prototype enables better control for risk avoidance/mitigation and reduces grave design flaws that may creep into the architecture.

The core activities of the technology prototype include:

2.1) Concept Analysis

2.2) Architecture Analysis

2.3) POC Construction

2.4) POC Documentation

2.1) Concept Analysis : First level theoretical evaluation for the POC implementation spawning the appropriate context for POC implementation. This phase is more to rationalize the scope for POC so as to not get carried away or overwhelmed with requirements not germane to establish necessary objectives. This activity will also help rationalize if the POC is necessary in the first place.

2.2) Architecture Analysis : Once a context and a rationale are established through concept analysis, it becomes necessary to analyze multiple styles of architectural designs. This activity is iterative and incremental in nature. Architectural analysis at the prototype stage tends to be driven by an “Agile process”, with quick success criteria used to choose a specific design (or a couple of designs) to implement in the POC construction phase.

2.3) POC Construction : POC construction is also iterative and incremental in nature, more so driven by an agile process to evaluate the best design for a given requirement. The POC construction will implement multiple architectural designs and will concentrate on quickly throwing away bad designs in the process.

2.4) POC Documentation : POC documentation is the most important activity throughout the prototype discipline. All the decisions starting from concept analysis through construction must be well documented to understand the merits of the decisions. It is very much necessary to justify the decisions through documenting the pros/cons, merits/demerits, implications, risks/mitigation and trade-offs made while arriving at a decision. Any compromise on the documentation will either prove costly at the later stages due to lack of understanding of a decision, or will expend unnecessary effort to recursively re-justify the decisions through unproductive means.

3. Feasibility Assessment

The feasibility assessment stage assesses the feasibility and viability of the POC. This assessment is different from the feasibility analyses done at the technology management process block level. POC feasibility is assessed from a purely technical angle, within the context of the architecture or design model used within the POC. This stage is iterative in nature: when a given model is considered not viable for implementation, the model is quickly thrown away and a better design is synthesized for consideration. Feasibility assessment is also incremental in nature.

For example, during POC feasibility analysis, the POC conclusions are carefully evaluated to understand the trade-offs and merits with respect to:

- Complexity (design patterns, architectural style, algorithms) and whether it affects the deadlines.

- Risks involved and mitigation strategies.

- Product architecture readiness to augment the design under evaluation (architectural integrity).

- Platform design changes required, if any.

- Functional design changes required, if any.

- Cost of implementation, including effort per resource type and cost of technology (tools etc.).

The core activities of feasibility assessment include:

3.1) Assess the complexity of the POC.

3.2) Assess the effort of implementation.

3.3) Assess the impact on existing milestones.

3.1) Complexity of POC : Complexity analysis is already mostly done during the architectural analysis activities within the technology prototype process block. During feasibility analysis, an overall analysis in terms of the augmentation of the design into the product architecture is conducted to understand the nature of changes involved for the existing product. These changes can be to the platform or to the application depending on the context of implementation.

- Gap analysis: Gap analysis will help understand the delta of all the changes required to implement the POC.

- Impact Analysis: Impact analysis will help understand the risks and efforts involved for the delta identified during gap analysis.
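The two analyses can be sketched as a delta followed by a sum over that delta. This is a minimal illustration under stated assumptions: the component names, versions, and effort figures are invented, and `gap_analysis` and `impact_analysis` are hypothetical helper names rather than part of any established tool.

```python
def gap_analysis(current, required):
    """Return the components whose required version differs from (or is missing in) the product."""
    return {name: version for name, version in required.items()
            if current.get(name) != version}


def impact_analysis(gap, effort_days):
    """Total the estimated effort (in days) for every component in the gap."""
    return sum(effort_days[name] for name in gap)


# Illustrative data only.
current = {"audit-library": "1.0", "event-manager": "1.2"}
required = {"audit-library": "2.0", "event-manager": "1.2", "code-generator": "0.1"}
effort_days = {"audit-library": 10, "code-generator": 5}

gap = gap_analysis(current, required)
print(sorted(gap))
print(impact_analysis(gap, effort_days))
```

In practice the impact side would also carry the risks attached to each delta item, not only an effort number.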

3.2) Implementation Effort : A POC is usually a quick prototype that establishes an objective success criterion for a given technology contender to be implemented within the product. During feasibility assessment, it becomes necessary to project the effort required to do the real work of implementing it in the product. Effort estimation is important for assessing the cost of implementation. Effort estimates are directly proportional to the complexity of implementation.

3.3) Impact on existing milestones : Project impact analysis is based on the complexity of implementation as well as the effort involved in implementing it. In effect, during project impact analysis, the effort is evaluated against the existing milestones (most importantly, those of the functional implementations).

4. Executive Committee Sign-off

Executive Committee sign-off is a discipline enforced for high-cost, high-impact project implementations. This discipline is exceptional in nature and will be exercised based on thresholds set for cost, effort, complexity, delta etc.

This discipline ensures appropriate visibility for high-impact projects (POC implementations), so that executive decision makers can sign off on any deviations from the current plans, releases, or technology.

Mostly, the sign-offs are required for project deviations, project cost/effort, or any other thresholds set as per executive will.
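A threshold check of this kind is easy to express in code. The sketch below assumes three measures (cost, effort, milestone slip) and made-up limits; a real organization would choose its own measures and values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Assessment:
    cost: float               # projected cost of implementation
    effort_days: int          # projected effort
    milestone_slip_days: int  # projected deviation from existing milestones


# Hypothetical thresholds; the real values are whatever the executives set.
THRESHOLDS = Assessment(cost=50_000, effort_days=60, milestone_slip_days=0)


def requires_signoff(assessment, thresholds=THRESHOLDS):
    """Sign-off is needed as soon as any single measure exceeds its threshold."""
    return (assessment.cost > thresholds.cost
            or assessment.effort_days > thresholds.effort_days
            or assessment.milestone_slip_days > thresholds.milestone_slip_days)


small_change = Assessment(cost=8_000, effort_days=15, milestone_slip_days=0)
risky_change = Assessment(cost=8_000, effort_days=15, milestone_slip_days=10)
print(requires_signoff(small_change), requires_signoff(risky_change))
```

Because the discipline is exceptional in nature, the check returns false for most work and only escalates the cases that cross a limit.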

5. Technology Implementation

The technology implementation discipline helps in realizing the signed-off POC within the product. The technology implementation discipline is reached only if the context of implementation is one of the following:

- Platform component implementation (change)

- Framework, library, toolkit

- Third party induction into product

- Technology Migrations (Based on technology roadmap)

- Tools development for internal use (productivity, deployability, configurations etc…)

Technology implementation will be governed by the same principles that apply to all other implementation (construction) projects. The principles of iterative and incremental development, quality assurance and iterative release cycles are fully applicable here.

Technology implementations will be governed by active architectural reviews to ensure proper design-to-code realization of the designs proposed through the POC. Architectural reviews and architecture assurance will be covered in detail later in the document. The following process flow helps emphasize the iterative nature of any implementation lifecycle.

Note: the implementations are influenced by “change requests” raised during periodic architecture reviews as well as “bugs/issues” found during quality assurance cycles.

The implementations are subjected to unit tests, feature tests (based on technology requirements), and tests specific to architecture quality that includes modularity, configurability, performance, scalability etc.

6. Technology Quality Assurance

Technology quality assurance is a discipline to establish quality for all the technology components released to the base platform. Technology quality assurance activities are, in spirit, no different from functional quality assurance activities. The only key differentiator is the nature of the requirements and test cases that influence the quality assurance process.

Technology requirements specifications document and use cases will be used to feed the test cases specific to technology QA. The requirements will be generally for the features or functionality of a technology component such as framework, toolkit or library.

Activities: All/any activity adjudged and obvious to the QA process (as decided through PDLC) will be applicable within this process. QA activity will be iterative and regressive to maintain multiple bug cycles during the course of the project.

Artifacts: The input and output artifacts will also be specific to the QA process as decided by the PDLC. Bugs and issues will be logged using an appropriate tool.

Key Note: More specifically, unit tests and code coverage must have very high emphasis during QA of technology components released to the base platform. Also stringent performance, scalability and modularity requirements must be specified as the core requirements for all components that get released to the base platform. Generally the code coverage percentage, performance numbers and load capacity must be relatively higher for base platform components compared to application components.
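The idea that base platform components face a higher bar than application components can be captured as a small quality gate. The coverage figures and the name `passes_coverage_gate` below are assumptions chosen for illustration; the text only says that platform numbers should be relatively higher.

```python
# Hypothetical minimum code coverage per component type.
MIN_COVERAGE = {"platform": 0.90, "application": 0.70}


def passes_coverage_gate(component_type, coverage):
    """Return True when measured coverage meets the minimum for the component type."""
    return coverage >= MIN_COVERAGE[component_type]


# The same measured coverage passes for an application component
# but fails for a base platform component.
print(passes_coverage_gate("application", 0.80))
print(passes_coverage_gate("platform", 0.80))
```

The same pattern extends to performance numbers and load capacity by adding further per-type thresholds.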

7. Core Platform Release

The platform release process is a discipline to govern the components that get released into the mainstream base platform. The release process ensures proper change control and configuration management for the components within the base platform. Platform release applies to all home-grown components as well as third-party implementations.

Activities: All/any activities obvious to the release process (as decided by the PDLC) will also be applicable to the core platform release process.

Artifacts: The input and output artifacts will be specific to the release process as decided by the PDLC.

Key Note: One of the core output artifacts for technology release is the ConfigMap updates of the base platform. Also relevant release notes and risks must be part of the output artifacts. The ConfigMap and risks list will be primary input artifacts for the “technology life-cycle management” discipline.

8. Technology Lifecycle Management

Technology lifecycle management is a discipline in which the base platform and technologies are serviced and maintained throughout the lifecycle of the related components. During lifecycle management, it becomes necessary to identify the primary owner of the relevant technology component throughout the lifecycle of the component. The lifecycle can be broadly broken down into the following three phases:

8.1) Technology Establishment

8.2) Technology Sustenance

8.3) Technology Sunsetting

8.1) Establishment : Within the establishment phase, one needs to ensure that all the relevant documents, binaries, source and related artifacts are made available to the appropriate groups (engineering, PSG or partners) to perform business as usual. Typically, developer education (walkthroughs, workshops or simple whiteboarding) is the core concern during the establishment phase.

During this phase, the components that were released into the base platform will encounter a lot of hiccups when engineers and developers start using them for the first time within the product. Even though comprehensive QA will have been performed on the components, engineers and developers will probably still raise a good number of issues and bugs. The primary focus while planning the establishment should be to ensure that the owners of the released components dedicate their time to a smoother inception of the components in any functional/application projects.

During this phase, it is important to establish a strict change management process for any changes requested by the developer community. It may not be necessary to implement all changes into the components immediately. Instead, due diligence must be enforced so that only critical change requests that were overlooked during the development lifecycle of the component are fed back. Consolidated changes (after analysis) must be routed through the further activities of the technology management process block.

Note: Lifecycle management is a sub-process/activity of the Technology Management discipline.

Typically the establishment phase lasts until the first core product release that includes the related technology components is made. Generally, tag promotions must be used to identify the status of technology components. In most cases, the end of the establishment phase promotes the components to “production ready” or “established”.
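Tag promotion can be thought of as a small state machine in which only certain moves are allowed. In the sketch below, "established" and "eol identified" are tags used in this article, while "released" and "sunset" and the exact set of allowed promotions are assumptions made to complete the example.

```python
# Hypothetical promotion rules: each tag maps to the tags it may be promoted to.
ALLOWED_PROMOTIONS = {
    "released": {"established"},
    "established": {"eol identified"},
    "eol identified": {"sunset"},
    "sunset": set(),
}


def promote(current_tag, new_tag):
    """Return the new tag if the promotion is allowed, otherwise raise ValueError."""
    if new_tag not in ALLOWED_PROMOTIONS.get(current_tag, set()):
        raise ValueError(f"cannot promote from {current_tag!r} to {new_tag!r}")
    return new_tag


tag = promote("released", "established")
print(tag)
```

Rejecting a jump such as "released" straight to "sunset" forces a component to pass through each phase of the lifecycle in order.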

8.2) Sustenance : The sustenance phase of the technology lifecycle is a post-establishment activity. The primary focus of the sustenance phase is to ensure continued maintenance of the components that were established into the product. Some of the core activities during the sustenance phase are:

- Timely resolutions of external bugs (customer bugs)

- External supplementary requirements gathering (Scalability, Performance, Extensibility, Usability, Configurability)

- Identify component enhancements

- Due diligence on CRs (Change requests)

- Consolidation and categorization of CRs, enhancements and other requirements for next version of component.

- Component version and release planning

- Inputs for component EOL (End-of-life) analysis

There can be multiple minor releases during sustenance, but a major release must be fed back through the activities of technology management, that is, through the full technology process lifecycle. Deviations from the technology architecture process are quite possible in these scenarios.
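The routing rule for releases can be sketched with a version comparison. This assumes version strings of the form "major.minor", which the article does not prescribe; the function name and the returned labels are likewise illustrative.

```python
def route_release(current_version, next_version):
    """Decide which process handles a release, based on the major version number.

    Assumes versions look like "major.minor", for example "2.3".
    """
    current_major = int(current_version.split(".")[0])
    next_major = int(next_version.split(".")[0])
    if next_major > current_major:
        # A major release goes back through technology management.
        return "technology management"
    # Minor releases stay within the sustenance phase.
    return "sustenance"


print(route_release("2.3", "2.4"))
print(route_release("2.4", "3.0"))
```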

8.3) Sunsetting : The sunsetting phase is the phase in which components whose EOL (end of life) has been identified are eventually phased out of the product. This phase contains the most critical activities, which ensure a smoother transition for the product during the component sunset.

Building up the requirements for which components need to be identified for EOL is part of the architecture roadmap management discipline. That is, the requirements can arise from many factors or needs in any discipline, process, dimension or group, but collecting these requirements and analyzing them to objectively justify that the EOL is in fact necessary is part of architecture roadmap management.

The sunsetting phase is an outcome of the decision already made during the roadmap management stage, where EOL is tagged to the component. This phase will kick-start further processes on how to sunset the components. What comes next after the sunset must already have been identified during architecture roadmap management.